MCP Quality Benchmark

MCP responses were reaching 30–40k tokens per query, inflating cost, slowing generation, and degrading retrieval accuracy. I ran a benchmark of 8 MCP configurations to determine the optimal metadata format and chunking strategy. The winning configuration achieved 92% retrieval accuracy and reduced token consumption by 35%, and it now informs the new MCP ingestion standard.

Role

Lead Designer & AI Systems Architect

Project Type

Design System AI Infrastructure

Team

Led end-to-end with 1 engineer + 1 DT partner

Contribution

Benchmarking strategy, implementation, analysis, and final recommendation

Tools

Cursor, Claude, MCP, React, TypeScript, Anthropic API, GitLab

Duration

2 months

The Problem

The MCP had already generated 4,000+ prototypes, but its responses ballooned to 30–40k tokens even for trivial queries. That created cost bloat and latency, and it reduced retrieval accuracy. No one knew whether the root cause was the metadata format, the chunking strategy, or the infrastructure. Without that clarity, we could not standardize AI input or scale reliable prototype automation.

This MCP performance issue directly causes:

- High token consumption

- Increased cost

- Context window pressure

- Poor signal-to-noise ratio

- Higher latency
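To make "high token consumption" and "context window pressure" measurable, a response can be checked against a budget before it reaches the model. The sketch below is a minimal illustration, not the benchmark's actual tooling. It assumes the common rule of thumb of roughly four characters per token (a real tokenizer will give different counts), and the names `estimateTokens` and `checkResponseBudget`, the 4,000-token budget, and the 200,000-token context window are hypothetical example values.

```typescript
// Rule of thumb (assumption): roughly 4 characters per token for English text and JSON.
// A real tokenizer will give different counts; this is only for coarse budgeting.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

interface BudgetReport {
  estimatedTokens: number;
  budget: number;
  overBudget: boolean;
  shareOfContext: number; // fraction of the context window this response would consume
}

// Hypothetical helper: compares an MCP response against a token budget and a context window.
function checkResponseBudget(
  response: string,
  budget: number,
  contextWindow: number,
): BudgetReport {
  const estimatedTokens = estimateTokens(response);
  return {
    estimatedTokens,
    budget,
    overBudget: estimatedTokens > budget,
    shareOfContext: estimatedTokens / contextWindow,
  };
}

// Example values (hypothetical): a 140,000-character response, a 4,000-token budget,
// and a 200,000-token context window.
const report = checkResponseBudget("x".repeat(140_000), 4_000, 200_000);
console.log(report.estimatedTokens, report.overBudget); // 35000 true
```

A 140,000-character response estimates to 35,000 tokens, inside the 30–40k range described above, and would consume 17.5% of a 200,000-token window on its own.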

Decision and Outcome

Which MCP configuration produces the highest quality input?

Experiment A and Semantic Chunker both performed well, but Semantic Chunker offers the best value: it achieved the highest retrieval accuracy (92%) while reducing token consumption by 88% compared to the in-production baseline.

At 4,000 prototypes per month (~240,000 queries annually), this translates to approximately $12,000 in annual savings while also improving retrieval accuracy by 3 points (89% to 92%). Semantic-chunked JSON is now the standard for MCP ingestion.

| Rank | Configuration | Token Savings | Cost Savings (per query) | Retrieval Accuracy |
|---|---|---|---|---|
| 1 | Semantic Chunker (chunking at ingestion) | 88% | $0.05 | 92% |
| 2 | In-Production Baseline | Baseline | Baseline | 89% |
| 3 | Experiment A (pre-chunked JSON) | 87% | $0.05 | 86% |
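The annual savings figure follows from the table's per-query saving and the stated query volume. As a worked check, assuming the $0.05 saving applies uniformly to every query: 240,000 × $0.05 = $12,000 per year, and 92% minus 89% gives the 3-point accuracy gain. The stated figures also imply about five queries per prototype (240,000 ÷ (4,000 × 12)); that ratio is derived here, not reported by the benchmark. The helper names are illustrative.

```typescript
// Figures taken from this case study; the per-query saving is the table's "Cost Savings" value.
const PROTOTYPES_PER_MONTH = 4_000;
const QUERIES_PER_YEAR = 240_000;
const SAVINGS_PER_QUERY_USD = 0.05;

// Assumes the per-query saving applies uniformly to every query.
function annualSavingsUsd(queriesPerYear: number, savingsPerQuery: number): number {
  // Round to cents to avoid floating-point noise in the displayed total.
  return Math.round(queriesPerYear * savingsPerQuery * 100) / 100;
}

// Derived from the stated figures, not measured directly.
function impliedQueriesPerPrototype(prototypesPerMonth: number, queriesPerYear: number): number {
  return queriesPerYear / (prototypesPerMonth * 12);
}

function accuracyGainPoints(candidatePct: number, baselinePct: number): number {
  return candidatePct - baselinePct;
}

console.log(annualSavingsUsd(QUERIES_PER_YEAR, SAVINGS_PER_QUERY_USD)); // 12000
console.log(impliedQueriesPerPrototype(PROTOTYPES_PER_MONTH, QUERIES_PER_YEAR)); // 5
console.log(accuracyGainPoints(92, 89)); // 3
```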

The Method

1. Cloned the in-production MCP repository

2. Generated metadata for each MCP experiment

3. Ingested the new data into each MCP locally

4. Verified that all 8 MCP configurations were running

I created 8 MCPs with distinct metadata pipelines, ran each with identical prompts, and served each on its own local port to prevent cross-contamination. Learn more about the AIMS pipeline here.
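The isolation setup can be sketched as a run plan: every configuration gets its own local port, and every configuration receives the same prompt list. This is a minimal illustration under assumptions, not the actual harness; the config identifiers, the `buildRunPlan` helper, the starting port 3100, and the sample prompts are all hypothetical.

```typescript
// Hypothetical identifiers for the eight configurations in this case study.
type ConfigId =
  | "in-production"
  | "semantic-chunker"
  | "exp-a"
  | "exp-b"
  | "exp-c"
  | "exp-d"
  | "exp-e"
  | "exp-f";

interface RunSpec {
  config: ConfigId;
  port: number; // local port serving this configuration's MCP
  promptIndex: number;
  prompt: string;
}

// Gives each configuration its own port and pairs it with the identical prompt list,
// so no two configurations share a server and every one sees the same inputs.
function buildRunPlan(configs: ConfigId[], prompts: string[], basePort = 3100): RunSpec[] {
  if (new Set(configs).size !== configs.length) {
    throw new Error("Each configuration must appear exactly once");
  }
  const plan: RunSpec[] = [];
  configs.forEach((config, i) => {
    const port = basePort + i;
    prompts.forEach((prompt, promptIndex) => {
      plan.push({ config, port, promptIndex, prompt });
    });
  });
  return plan;
}

const ALL_CONFIGS: ConfigId[] = [
  "in-production", "semantic-chunker", "exp-a", "exp-b",
  "exp-c", "exp-d", "exp-e", "exp-f",
];
// Hypothetical prompts for illustration only.
const plan = buildRunPlan(ALL_CONFIGS, [
  "Which props does Button accept?",
  "Build a sign-in form",
]);
console.log(plan.length); // 16
```

With 8 configurations and P prompts, the plan has 8 × P runs spread across 8 distinct ports, so every result traces back to exactly one server.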

8 MCP Configurations, Same Knowledge:

- In-Production MCP - Monolithic and verbose JSON

- Semantic Chunker - Optimized MCP ingester (Infra level)

- Experiment A - Pre-chunked and monolithic JSON

- Experiment B - Original human-facing MDX documentation

- Experiment C - Markdown only

- Experiment D - Hybrid MD + JSON

- Experiment E - Domain-separated JSON

- Experiment F - TOON (Token-Oriented Object Notation)
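Experiment F's TOON format saves tokens mainly by declaring field names once for a uniform array instead of repeating them on every object, as JSON does. The sketch below is a simplified, TOON-style tabular encoder written for illustration; it is not the official TOON library, it handles only flat arrays of objects that share the same keys, and it skips the quoting and escaping rules the real format defines. The `encodeTabular` name and the `components` data are hypothetical.

```typescript
type Primitive = string | number | boolean | null;
type Row = Record<string, Primitive>;

// Simplified TOON-style encoding: one header line declares the array length and field
// names, then each object becomes one comma-separated row indented by two spaces.
// Assumptions: all rows share the first row's keys, and no value contains a comma or newline.
function encodeTabular(name: string, rows: Row[]): string {
  if (rows.length === 0) return `${name}[0]:`;
  const fields = Object.keys(rows[0] ?? {});
  const header = `${name}[${rows.length}]{${fields.join(",")}}:`;
  const lines = rows.map((row) => "  " + fields.map((f) => String(row[f])).join(","));
  return [header, ...lines].join("\n");
}

// Hypothetical design-system metadata used only to compare sizes.
const components: Row[] = [
  { name: "Button", variant: "primary", size: "md" },
  { name: "Link", variant: "subtle", size: "sm" },
];

const asJson = JSON.stringify({ components });
const asToon = encodeTabular("components", components);
console.log(asToon);
console.log(asJson.length, asToon.length);
```

For these two rows the TOON-style text is already shorter than the JSON string, and the gap grows with every added row because the field names are never repeated.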

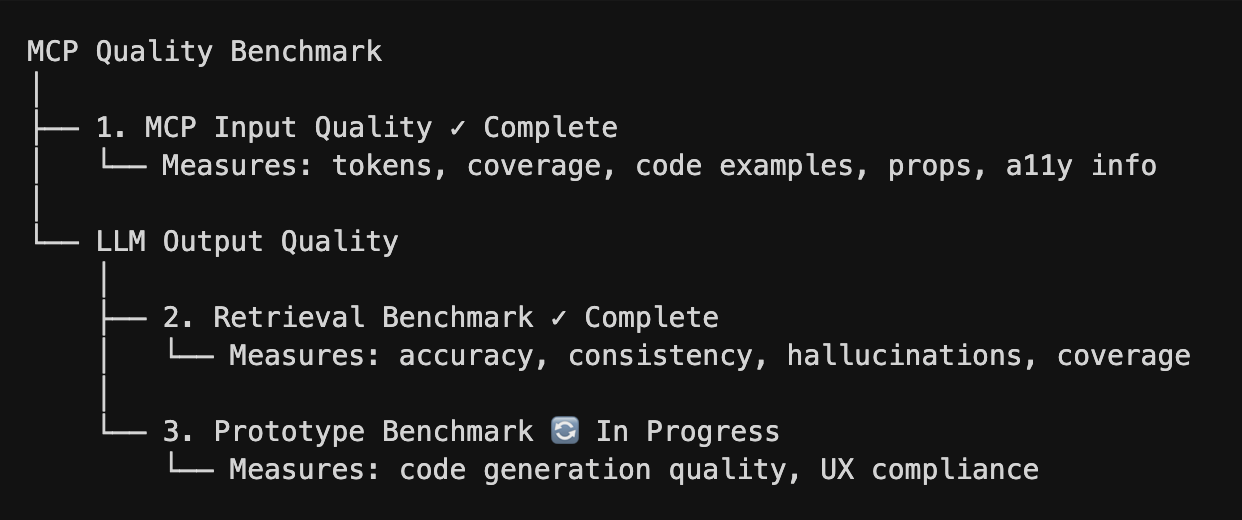

The Framework

Why this matters: benchmarks are meaningless without a definition of quality. I defined three dimensions because quality isn't one thing; it's three things in balance:

- MCP Input Quality: Is the MCP optimized for LLM reasoning?

- LLM Output Quality (Retrieval): Can the LLM find the correct information?

- LLM Output Quality (Prototype): Can the LLM produce Indeed code?
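One way to keep the three dimensions explicit is to record them side by side for each configuration, leaving a dimension empty until it has been measured. This is an illustrative data shape, not the author's scoring code; the `QualityScorecard` type and `missingDimensions` helper are hypothetical, and only the 92% retrieval figure comes from this benchmark.

```typescript
// Scores are percentages from 0 to 100; undefined means "not measured yet".
interface QualityScorecard {
  config: string;
  mcpInputQuality?: number; // Is the MCP optimized for LLM reasoning?
  retrievalQuality?: number; // Can the LLM find the correct information?
  prototypeQuality?: number; // Can the LLM produce Indeed code?
}

const DIMENSIONS = ["mcpInputQuality", "retrievalQuality", "prototypeQuality"] as const;
type Dimension = (typeof DIMENSIONS)[number];

// Lists the dimensions that still lack a score, so a config is never judged on one axis alone.
function missingDimensions(card: QualityScorecard): Dimension[] {
  return DIMENSIONS.filter((d) => card[d] === undefined);
}

// Only the retrieval score below comes from the benchmark; the other two are left unset here.
const semanticChunker: QualityScorecard = { config: "Semantic Chunker", retrievalQuality: 92 };
console.log(missingDimensions(semanticChunker));
```

For the Semantic Chunker record above, the helper reports the input and prototype dimensions as still open, which keeps the pending prototype benchmark visible instead of letting retrieval stand in for overall quality.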

Benchmark Evidence

Structured. Chunked. Smart. Coherent.

- Finding: Both top performers (Semantic Chunker at 92%, Exp A at 86%) use structured JSON.

- Finding: Both top performers combine chunking (semantic or pre-chunked) with deterministic JSON. Chunked beats monolithic.

- Finding: Semantic chunking achieved 92% coverage vs. 86% for pre-chunked JSON: same format, different chunking strategy.

- Finding: Domain-separated JSON (Exp E) achieved only 20% retrieval coverage: the information was there, but siloed. The hybrid format (Exp D) reached only 27% completion; the LLM couldn't even finish.
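The gap between semantic and pre-chunked JSON comes down to where chunk boundaries fall. The sketch below contrasts fixed-size splitting, which can cut a component's documentation mid-word, with boundary-aware splitting at Markdown headings, which keeps each section whole. It is a structural stand-in for illustration only: the Semantic Chunker's actual algorithm is not described here, and production semantic chunkers often also compare embeddings to decide where to split. The function names and sample text are hypothetical.

```typescript
// Fixed-size chunking: cuts every `size` characters, ignoring meaning and structure.
function fixedSizeChunks(doc: string, size: number): string[] {
  if (size <= 0) throw new Error("size must be positive");
  const chunks: string[] = [];
  for (let i = 0; i < doc.length; i += size) {
    chunks.push(doc.slice(i, i + size));
  }
  return chunks;
}

// Boundary-aware chunking: starts a new chunk at every Markdown heading,
// so each chunk holds one complete section.
function headingChunks(doc: string): string[] {
  const chunks: string[] = [];
  let current: string[] = [];
  for (const line of doc.split("\n")) {
    if (/^#{1,6}\s/.test(line) && current.length > 0) {
      chunks.push(current.join("\n").trim());
      current = [];
    }
    current.push(line);
  }
  if (current.length > 0) chunks.push(current.join("\n").trim());
  return chunks.filter((c) => c.length > 0);
}

// Hypothetical sample documentation.
const doc = "## Button\nUse for primary actions.\n## Link\nUse for navigation.";
console.log(headingChunks(doc));
console.log(fixedSizeChunks(doc, 20));
```

On the sample, heading-based chunking yields one chunk per component, while 20-character chunks split the word "primary" and the "## Link" heading across neighboring pieces.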

Next Steps

This research extends beyond a single benchmark. The findings inform:

1. Prototype Benchmark: Complete the full quality-framework evaluation before production rollout.

2. Mobile Design System Documentation for MCP: Implement semantic chunking for the Mobile DS MCP.

3. Design System AI Infrastructure: Work with AI leadership to integrate MCP ingestion standards into long-term AI platform strategy.

Prompt → Prototype

The prototype benchmark is the missing piece. Over the next few weeks, I will complete the full framework. Stay tuned!

I design systems that both humans and AIs can understand, trust, and build on.